Tutorial: Assembling the Gaffer Bot

In this tutorial, we will give you a first taste of Gaffer by covering its basic operations and concepts. The goal is for you to learn to make renders of a basic scene as quickly as possible, and provide a minimal basis to further explore the application and its documentation. It will cover a lot of ground quickly, and some details will be glossed over.

By the end of this tutorial you will have built a basic scene with Gaffer’s robot mascot, Gaffy, and rendered an output image. You will learn the following lessons, not necessarily in this order:

- Gaffer UI fundamentals

- Creating and connecting nodes

- Importing geometry

- Constructing a basic scene hierarchy

- Importing textures

- Building a simple shader

- Applying a shader to geometry

- Adding a light

- Using an interactive renderer

Note

This tutorial uses Appleseed, a free renderer included with Gaffer. While the Appleseed-specific nodes described here can be substituted with equivalents from Arnold or 3Delight, we recommend that you complete this tutorial using Appleseed before moving on to your preferred renderer.

Starting a new node graph

After installing Gaffer, launch Gaffer from its directory or by using the “gaffer” command. Gaffer will start, and you will be presented with an empty node graph in the default UI layout.

Importing a geometry scene cache

As Gaffer is a tool primarily designed for lookdev, lighting, and VFX process automation, we expect that your sequence’s modelling and animation will be created in an external tool like Maya, and then imported into Gaffer as a geometry/animation cache. Gaffer supports Alembic (.abc) and USD (.usdc and .usda) file formats, as well as its own native SceneCache (.scc) file format. Most scenes begin by importing geometry or images via one of the two types of Reader nodes: SceneReader or ImageReader.

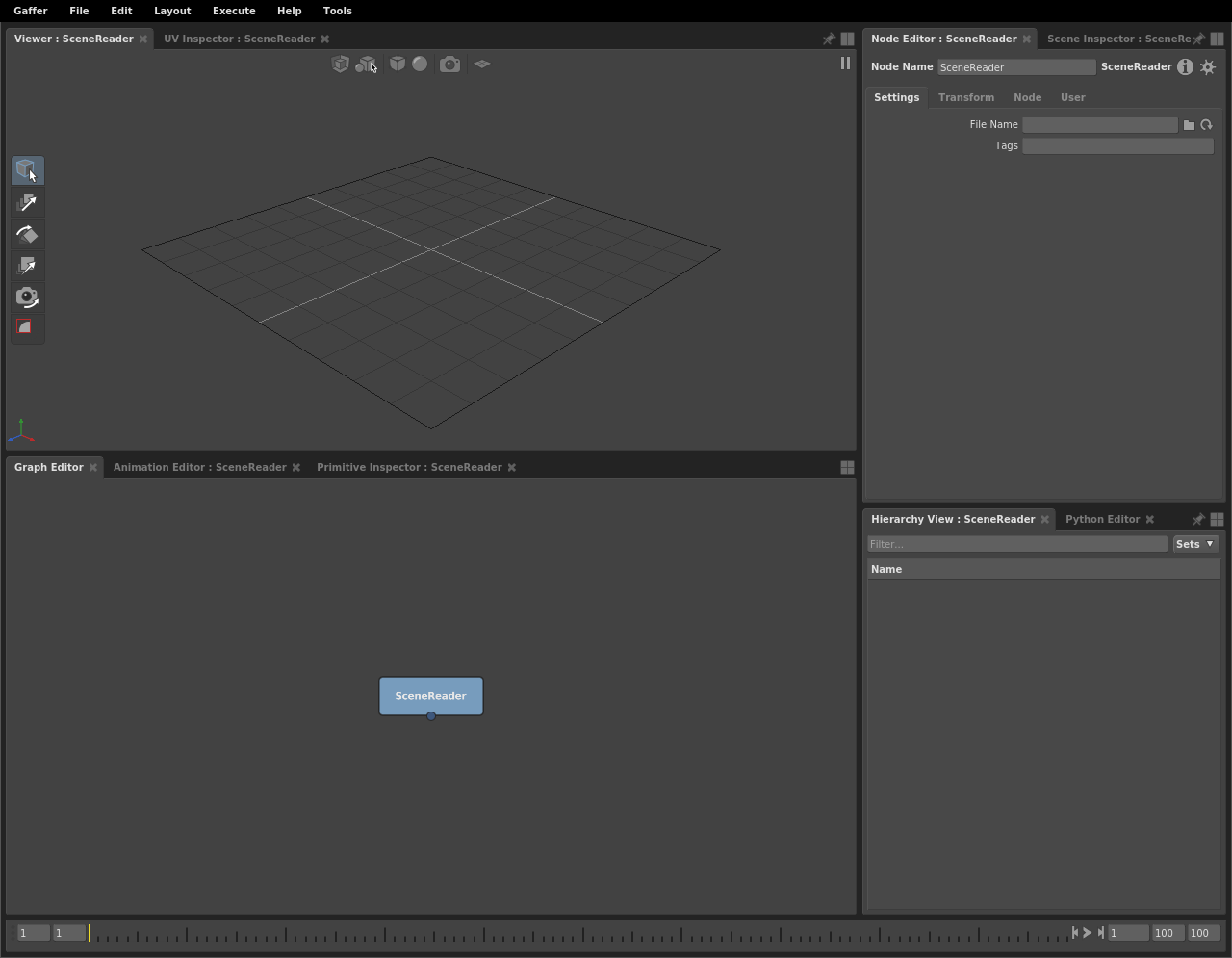

First, load Gaffy’s geometry cache with a SceneReader node:

In the Graph Editor in the bottom-left panel, right-click. The node creation menu will appear.

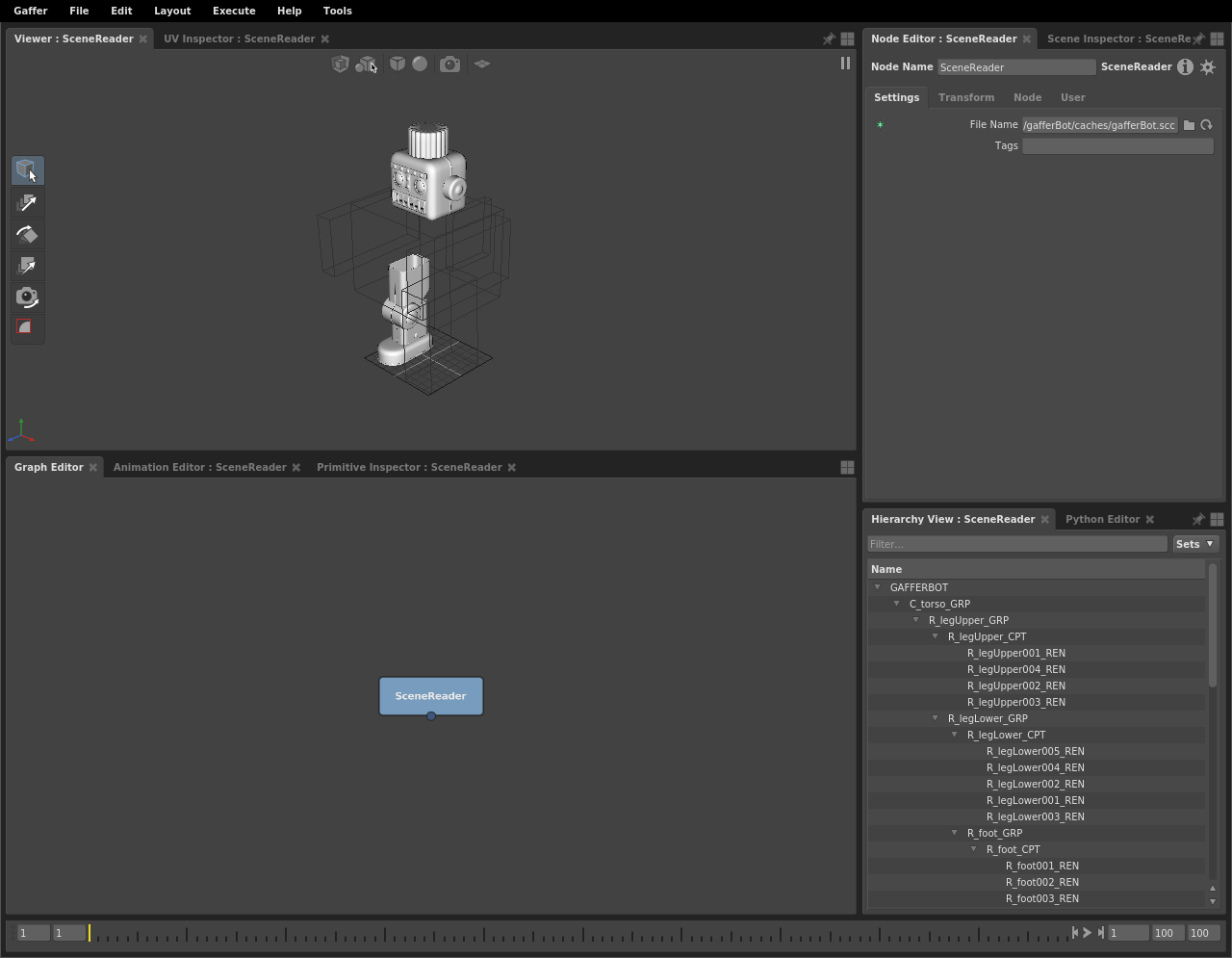

Select Scene > File > Reader. The SceneReader node will appear in the Graph Editor and be selected automatically.

The Node Editor in the top-right panel has now updated to display the SceneReader node’s values. In the File Name field, type

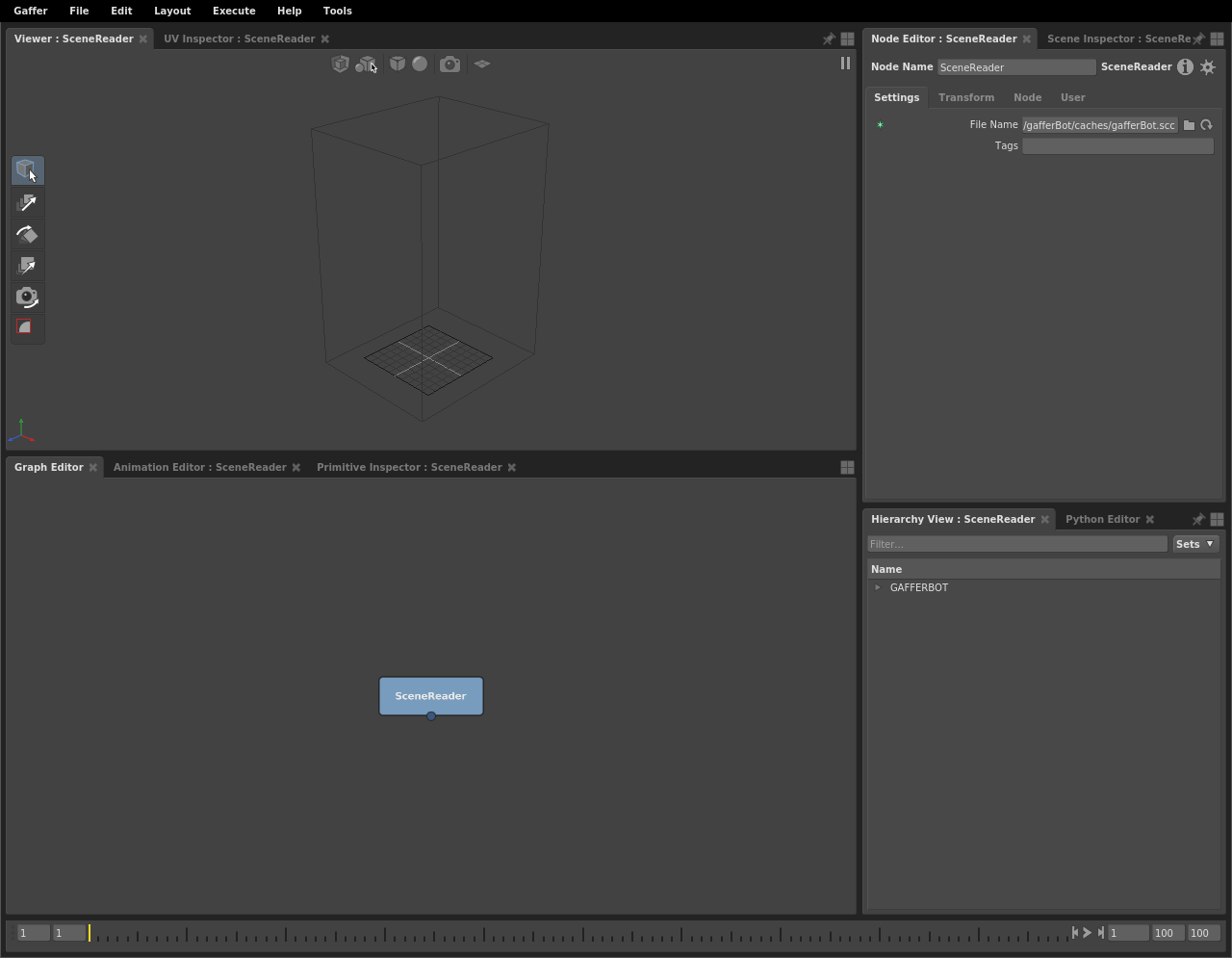

${GAFFER_ROOT}/resources/gafferBot/caches/gafferBot.scc.Hover the cursor over the background of the Viewer (in the top-left panel), and hit F. The view will reframe to cover the whole scene.
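The ${GAFFER_ROOT} prefix in the file name is a variable reference rather than literal text, and is expected to resolve to the Gaffer install directory. As a rough analogy, the sketch below expands such a reference with Python's standard library. The install location /opt/gaffer is a made-up example value, and Gaffer performs its own substitution internally, so this is not Gaffer's implementation.

```python
from string import Template

# Hypothetical install location, used only for illustration.
variables = {"GAFFER_ROOT": "/opt/gaffer"}

file_name = "${GAFFER_ROOT}/resources/gafferBot/caches/gafferBot.scc"

# Replace each ${NAME} reference with its value from the mapping.
resolved = Template(file_name).substitute(variables)
print(resolved)
```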

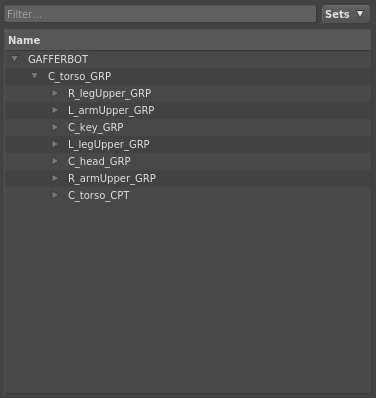

The SceneReader node has loaded, and the Viewer is showing a bounding box, but the geometry remains invisible. You can confirm that the scene has loaded by examining the Hierarchy View in the bottom-right panel. It too has updated, and shows that you have GAFFERBOT at the root of the scene. In order to view the geometry, you will need to expand the scene’s locations down to their leaves.

Important

By default, the Viewer, Node Editor, and Hierarchy View update to reflect the last selected node, and go blank when no node is selected.

The scene hierarchy

When you load a geometry cache, Gaffer only reads its 3D data: at no point does it write to the file. This lets you manipulate the scene without risk to the file.

Important

3D data in Gaffer can be non-destructively hidden, added to, changed, and deleted.

Further, Gaffer uses locations in the scene hierarchy to selectively render the objects (geometry, cameras) you need: in the Viewer, the objects of expanded locations will show, while the objects of collapsed locations will only appear as a bounding box. This on-demand object loading allows Gaffer to handle highly complex scenes. In your current node graph, only Gaffy’s bounding box is visible in the Viewer.

Important

Only objects that have their parent locations expanded will appear in full in the Viewer. Objects with collapsed parent locations will appear as a bounding box.
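The expansion rule can be pictured with a small toy model in plain Python. This is not Gaffer's API: the dictionary holds a simplified slice of Gaffy's hierarchy, and the function name visible_items is invented for this sketch. It walks down from the root and stops at the first collapsed location, which is drawn as a bounding box.

```python
# Toy scene: each location path maps to the names of its children.
# Locations without an entry are treated as leaves that hold an object.
children = {
    "/": ["GAFFERBOT"],
    "/GAFFERBOT": ["C_torso_GRP"],
    "/GAFFERBOT/C_torso_GRP": ["C_head_GRP"],
    "/GAFFERBOT/C_torso_GRP/C_head_GRP": ["C_head_CPT"],
    "/GAFFERBOT/C_torso_GRP/C_head_GRP/C_head_CPT": ["L_ear001_REN"],
}


def visible_items(path, expanded, children):
    """Return (path, how it is drawn) pairs for everything below `path`."""
    items = []
    for name in children.get(path, []):
        child = path.rstrip("/") + "/" + name
        if not children.get(child):
            items.append((child, "object"))        # leaf reached: draw the object
        elif child in expanded:
            items.extend(visible_items(child, expanded, children))
        else:
            items.append((child, "bounding box"))  # collapsed: draw bounds only
    return items


collapsed = visible_items("/", set(), children)
fully_expanded = visible_items("/", set(children), children)
print(collapsed)
print(fully_expanded)
```

With nothing expanded, only the bounding box of /GAFFERBOT is listed, which mirrors what the Viewer shows at this point in the tutorial.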

Adjusting the view in the Viewer

Like in other 3D tools, you can adjust the angle, field of view, and the position of the virtual camera in the Viewer.

- To rotate/tumble, Alt + click and drag.

- To zoom/dolly in and out, Alt + right click and drag, or scroll the mouse wheel.

- To track from side to side, Alt + middle click and drag.

Tip

If you lose sight of the scene and cannot find your place again, you can always refocus on the currently selected location by hovering the cursor over the Viewer and hitting F.

Creating a camera

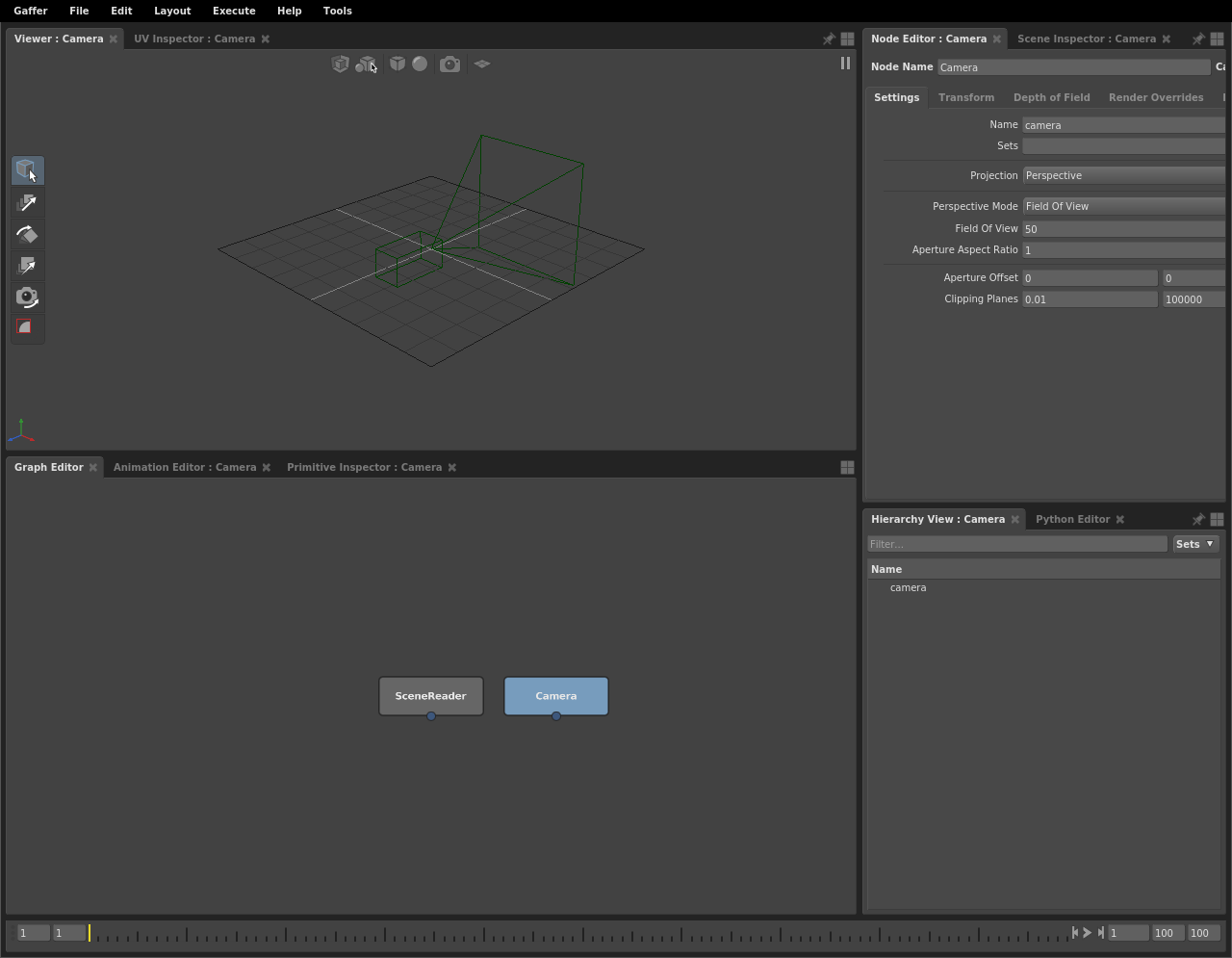

Before you can begin rendering the scene, you will need to create a camera. Just like how you created a SceneReader node to load in the geometry, you will create another node to add a camera.

Earlier, you learned how to create a node by navigating the node creation menu in the Graph Editor. If you know the name of the node you wish to create, and do not feel like mousing through the menu, you can instead use the menu’s search feature:

Right-click the background of the Graph Editor. The node creation menu will open.

Tip

With the cursor hovering over the Graph Editor, you can also hit Tab to open the node creation menu.

Type camera. A list of search results will appear. Camera should be highlighted.

Hit Enter. A Camera node will appear in the Graph Editor.

As before, the newly created node will be selected automatically, and the Viewer, Hierarchy View, and Node Editor will update to reflect this new selection.

Node data flow

So far, your graph works as follows: the SceneReader node is outputting a scene with Gaffy’s geometry, and the Camera node is outputting a camera object. In fact, the Camera node is outputting a whole scene containing a camera object. Any node that sends or receives scene data in this way is classified as a scene node. This paradigm may be a bit confusing compared to other DCCs, but it is one of Gaffer’s strengths.

Important

When a scene node is queried, such as when you select it, it will dynamically compute a scene based on the input values and data it is provided.

Scenes

In Gaffer, there is no single data set of locations and properties that comprise the sequence’s scene. In fact, a Gaffer graph is not limited to a single scene. Instead, when a node is queried, a scene is dynamically computed as that node’s output. You can test this by clicking the background of the Graph Editor. With no node selected, the Hierarchy View goes blank, because there is no longer a scene requiring computation. Thus, no scene exists. Since scenes are computed only when needed, Gaffer has the flexibility to support an arbitrary quantity of them.

Since neither of your nodes is connected, each of their scenes remains separate. If you were to evaluate your graph right now, it would calculate two independent scenes. For them to interface, they must be joined somewhere later in the graph.

Important

In the Graph Editor, node data flows from top to bottom.

When a scene node computes a scene, scene data passes through it like so:

- Data flows into the node through its input(s).

- If applicable, the node modifies the data.

- The node computes the resulting scene data and sends it through its output.
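The three steps above, together with the idea that a scene is only computed when a node is queried, can be sketched with a toy class in plain Python. ToyNode, its out method, and the Lowercase node are invented for this illustration and are not part of Gaffer's API. A scene is reduced to a list of location paths.

```python
class ToyNode:
    """Hypothetical stand-in for a scene node; not part of Gaffer's API."""

    def __init__(self, name, inputs=(), modify=None):
        self.name = name
        self.inputs = list(inputs)
        # By default a node passes its input data through unchanged.
        self.modify = modify or (lambda locations: locations)
        self.compute_count = 0

    def out(self):
        """Compute this node's scene on demand, pulling from upstream."""
        self.compute_count += 1
        locations = []
        for node in self.inputs:        # 1. data flows in through the inputs
            locations.extend(node.out())
        return self.modify(locations)   # 2. modify, 3. send the result out


reader = ToyNode("SceneReader", modify=lambda _: ["/GAFFERBOT"])
camera = ToyNode("Camera", modify=lambda _: ["/camera"])
lowercase = ToyNode("Lowercase", inputs=[reader],
                    modify=lambda locations: [p.lower() for p in locations])

# Nothing has been computed yet, because no node has been queried.
assert reader.compute_count == 0

print(lowercase.out())  # querying the downstream node pulls from the reader
print(camera.out())     # an unconnected node yields its own separate scene
```

Because the reader and the camera are not connected, querying one never touches the other, which is the toy equivalent of two independent scenes in one graph.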

Plugs

The inputs and outputs of a node are called plugs, and are represented in the Graph Editor as colored circles around the node’s edges.

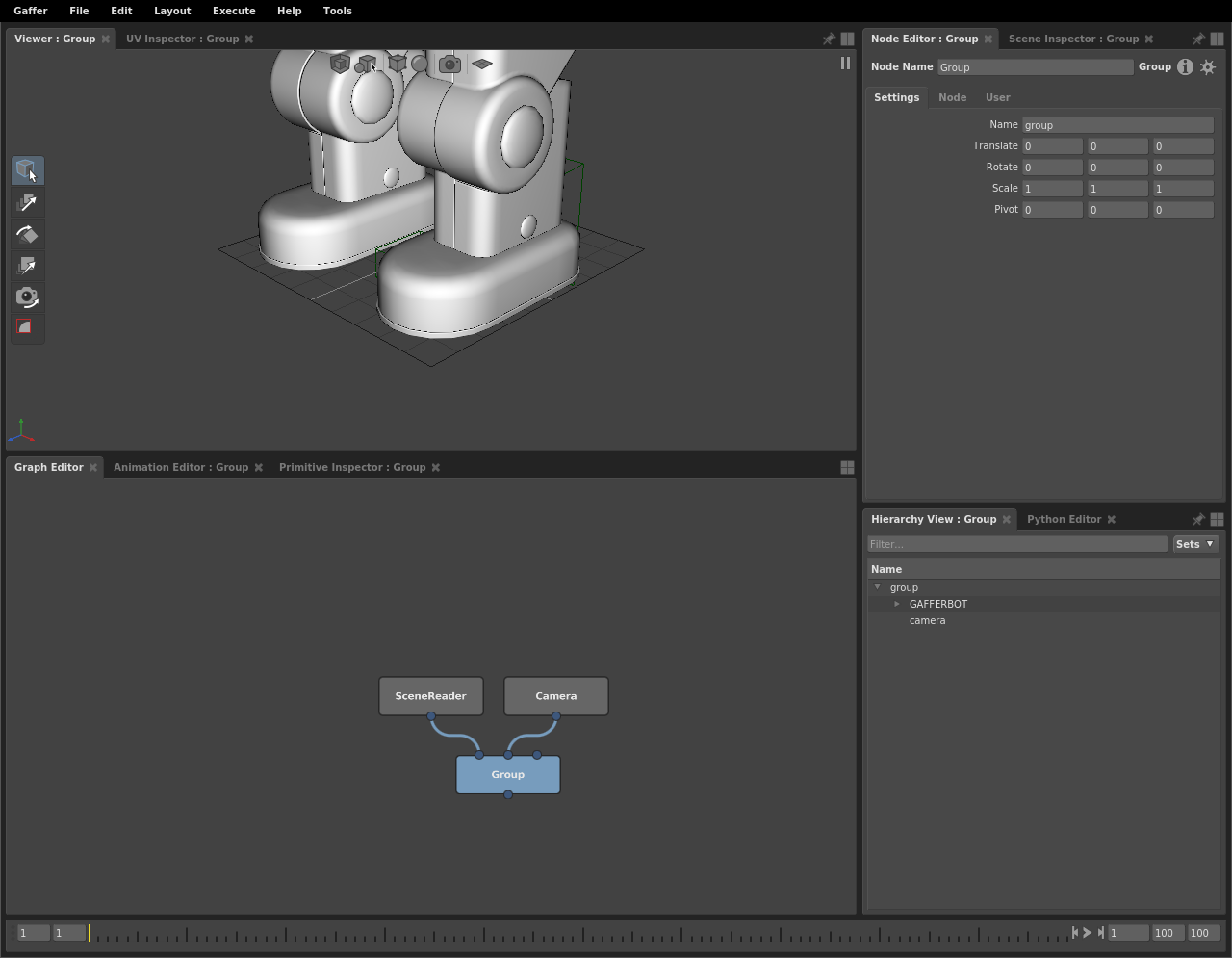

For your two nodes to occupy the same scene (and later render together), you will need to combine them into a single scene. You can connect both of their output plugs to a Group node, and you can also rearrange the nodes to better visually represent the data flow in the graph.

Connecting plugs

It’s time to connect the SceneReader and Camera nodes to combine their scenes:

- Click the background of the Graph Editor to deselect all nodes.

- Create a Group node (Scene > Hierarchy > Group).

- Click and drag the SceneReader node’s out plug (at the bottom; blue) onto the Group node’s in0 plug (at the top; also blue). As you drag, a node connector will appear. Once you connect them, a second input plug (in1) will appear on the Group node, next to the first.

- Click and drag the Camera node’s out plug onto the Group node’s in1 plug.

The Group node is now computing a new scene combining the input scenes from the two nodes above it, under a new parent scene location called group. You can see this new hierarchy by selecting the Group node and examining the Hierarchy View.

Only the combined scene computed by the Group node is modified. The upstream nodes’ scenes are unaffected. You can verify this by reselecting one of them and checking the Hierarchy View.
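As a rough picture of what the Group node does to the hierarchy, the sketch below models a scene as a list of location paths and parents every input location under a new root. The function group is a hypothetical helper written for this illustration, not Gaffer's implementation.

```python
def group(*input_scenes, name="group"):
    """Return a new scene with every input location parented under /<name>."""
    grouped = ["/" + name]
    for scene in input_scenes:
        grouped.extend("/" + name + path for path in scene)
    return grouped


reader_scene = ["/GAFFERBOT"]
camera_scene = ["/camera"]

grouped_scene = group(reader_scene, camera_scene)
print(grouped_scene)  # ['/group', '/group/GAFFERBOT', '/group/camera']
print(reader_scene)   # ['/GAFFERBOT']
```

The input lists are left untouched, in the same way that the upstream nodes' scenes are unaffected by the Group node.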

Positioning the camera

Next, you should reposition the camera so that it frames Gaffy. You can accomplish this using the built-in 3D manipulation tools in the Viewer.

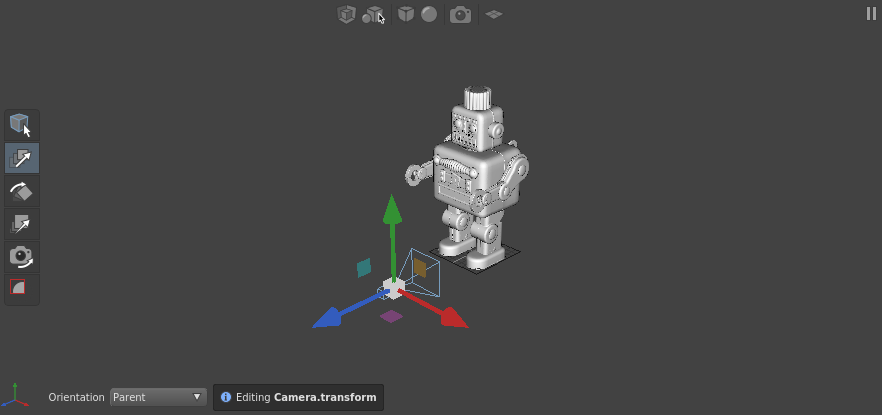

First, set the camera’s position using the TranslateTool:

Select the Group node in the Graph Editor.

Fully expand all scene locations by Shift + clicking the expansion arrow next to group in the Hierarchy View.

Click the camera object in the Viewer, or select the camera location in the Hierarchy View.

In the Viewer, click the TranslateTool button. The translation manipulators will appear on the camera.

Using the manipulators, move the camera back and up.

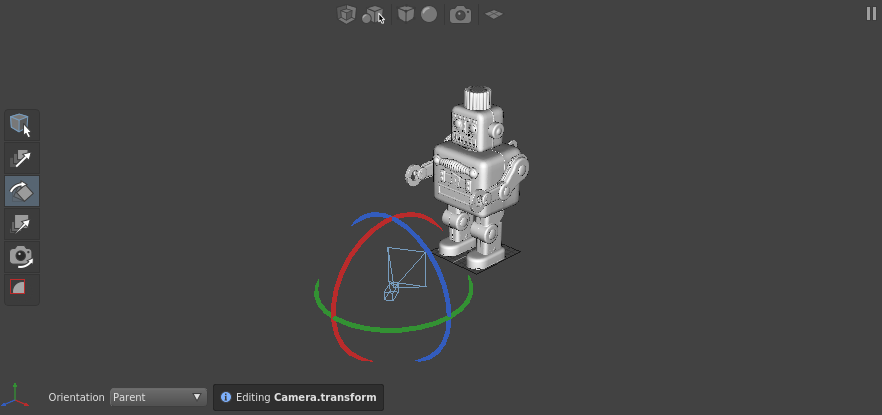

Next, rotate the camera using the RotateTool:

- In the Viewer, click the RotateTool button. The rotation manipulators will appear around the camera.

- Using the manipulators, rotate the camera so it points at Gaffy.

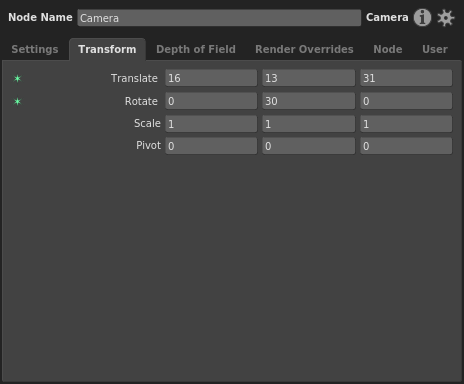

More precise camera adjustment

For more precise positioning and rotation, you can set the Translate and Rotate values in the Transform tab of the Node Editor:

Important

In the prior section Connecting Plugs, we referred to the main inputs and outputs of a node as plugs. In actuality, all the values you see in the Node Editor, including the camera’s transform, are plugs. For ease of use, only a subset of a node’s available plugs appear in the Graph Editor.

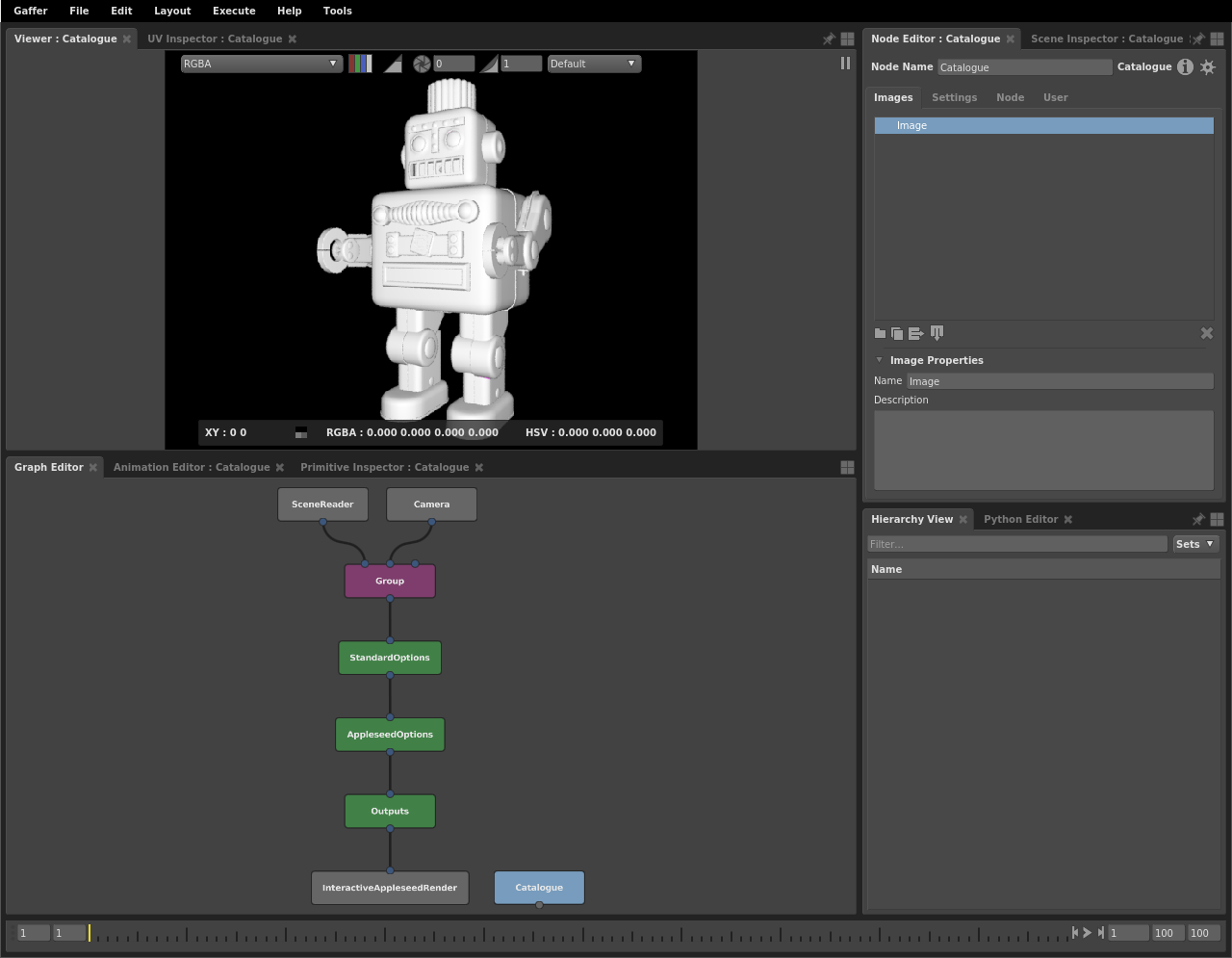

Rendering your first image

Now that you have defined the layout of your scene, you should perform a quick test-render to check that everything is working as expected. In order to do that, you need to place some render-related nodes to define your graph’s render settings.

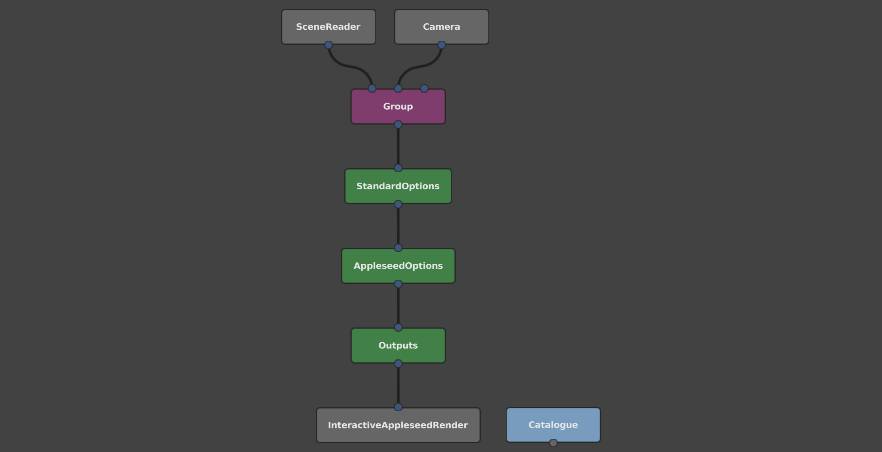

Create the render settings nodes:

First, select the Group node. Now, the next scene node you create will automatically connect to it.

Then, create the following nodes in sequence:

- StandardOptions (Scene > Globals > StandardOptions): Determines the camera, resolution, and blur settings of the scene.

- AppleseedOptions (Appleseed > Options): Determines the settings of the Appleseed renderer.

- Outputs (Scene > Globals > Outputs): Determines what kind of output image will be created by the renderer.

- InteractiveAppleseedRender (Appleseed > InteractiveRender): An instance of Appleseed’s progressive renderer.

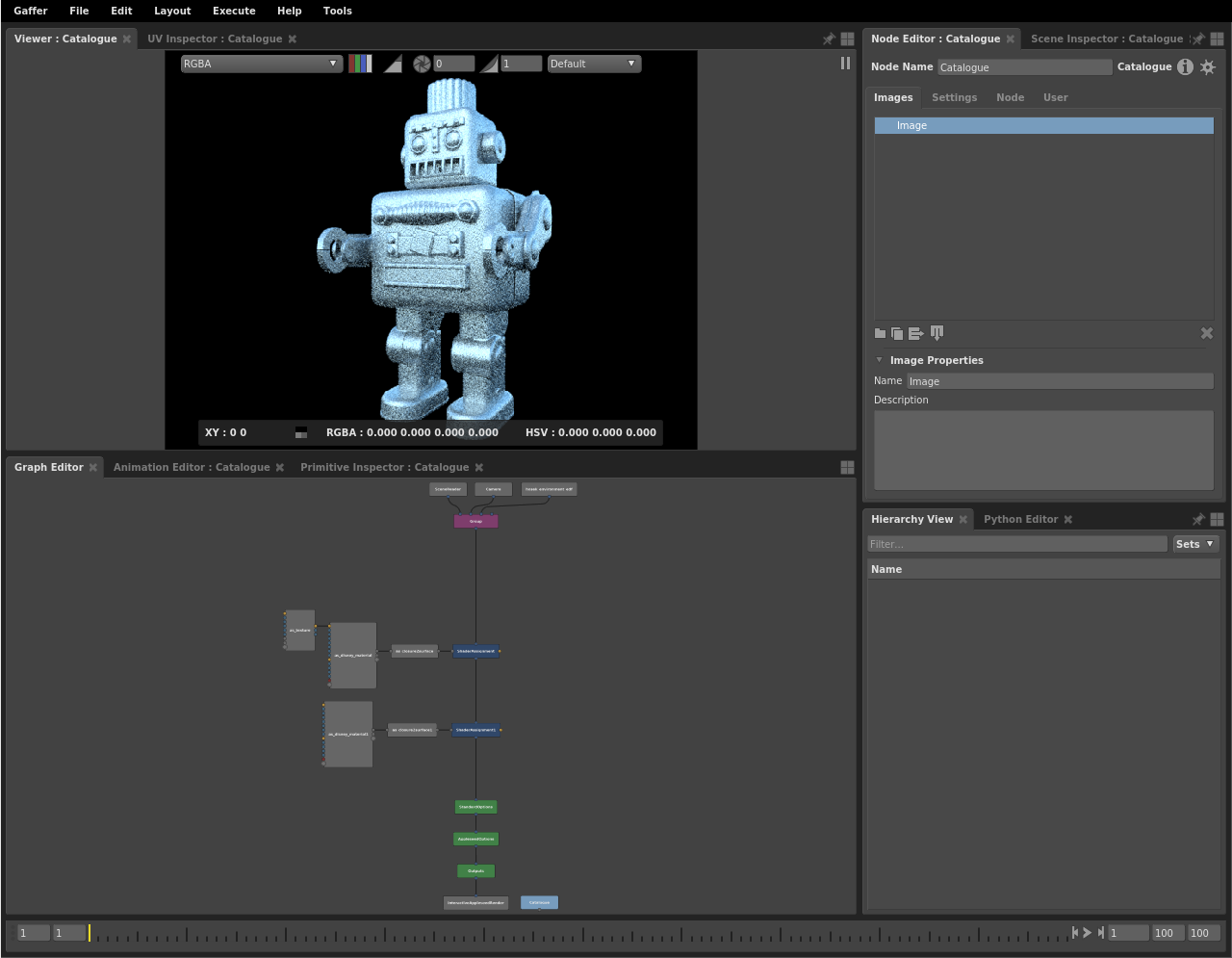

Finally, create a Catalogue node (Image > Utility > Catalogue). This is an image node for listing and displaying a directory of images in the Viewer. By default, it points to the default output directory of your graph’s rendered images. Place it next to the InteractiveAppleseedRender node.

Note

The Catalogue node is not a scene node, but an image node. It cannot be connected to a scene node.

In keeping with what we said earlier about nodes passing scenes to other nodes: each of the render nodes only applies to the scene delivered to it through its input plugs. If you had another unconnected scene running in parallel, none of these render properties would apply to it.

Although the scene contains a camera, you will need to tell the StandardOptions node to set it as a global property of the scene:

- Select the StandardOptions node.

- Specify the camera using the Node Editor:

- Click the Camera section to expand it.

- Toggle the switch next to the Camera plug to enable it.

- Type /group/camera into the plug’s field.
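The camera path is /group/camera rather than /camera because the Group node placed the camera under the group location. The toy sketch below records a render camera as a global property of a scene. The function set_render_camera, the dictionary layout, and the existence check are all assumptions made for illustration and do not describe how StandardOptions is implemented.

```python
def set_render_camera(scene, camera_path):
    """Return a copy of the scene whose globals name the render camera."""
    # Extra check added for illustration: the path must exist in the scene.
    if camera_path not in scene["locations"]:
        raise ValueError("no such location: " + camera_path)
    new_globals = dict(scene["globals"], camera=camera_path)
    return {"locations": scene["locations"], "globals": new_globals}


scene = {
    "locations": ["/group", "/group/GAFFERBOT", "/group/camera"],
    "globals": {},
}

with_camera = set_render_camera(scene, "/group/camera")
print(with_camera["globals"])  # {'camera': '/group/camera'}
print(scene["globals"])        # {}
```

Only the downstream copy carries the setting, in keeping with the idea that each node affects only the scene passing through it.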

Next, you need to add an image type to render:

- Select the Outputs node.

- In the Node Editor, click the add button and select Interactive > Beauty from the drop-down menu.

With all the settings complete, start the interactive renderer:

Select the InteractiveAppleseedRender node in the Graph Editor.

In the Node Editor, click the play button to start the renderer.

Select the Catalogue node.

Hover the cursor over the Viewer and hit F to frame the Catalogue node’s live image of the interactive render.

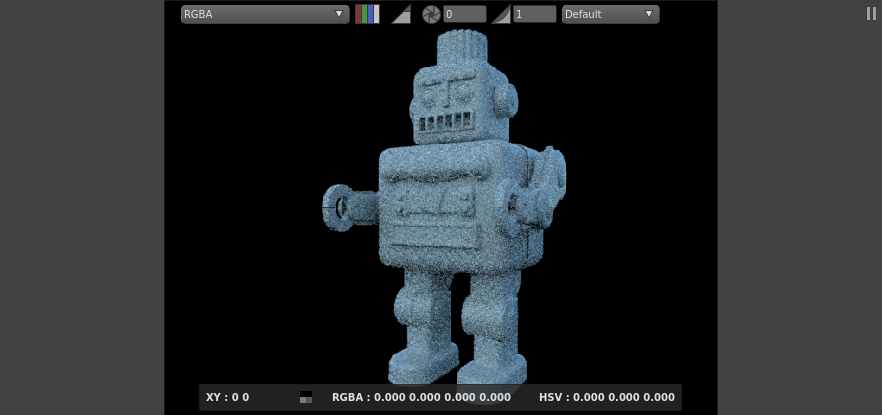

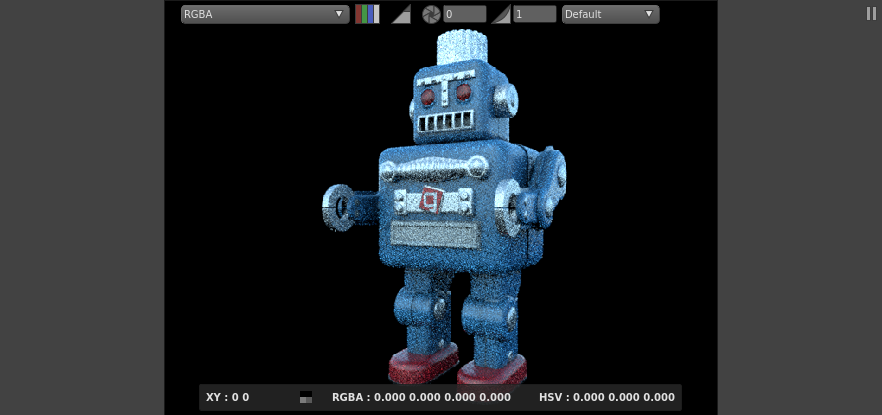

Congratulations! You have successfully rendered your first image. Gaffy is currently lacking shading, lighting, and texturing. We will move on to those soon. First, you should adjust the UI to give yourself a more efficient workflow.

Pinning an editor to a node

As mentioned earlier, the Viewer, Hierarchy View, and Node Editor (each an editor) show their respective outputs of the currently selected node. This is not always convenient, because often you will need to edit one node while viewing the output of another. You can solve this by pinning an editor while a node is selected, which keeps that editor focused on the node.

To make switching between viewing Gaffy’s geometry and the render easier, you can modify the UI so you can work with two Viewers. First, start by pinning the last node in the graph that contains the scene:

- Select the InteractiveAppleseedRender node.

- Click the pin button at the top-right of the top panel. The pin button will highlight.

The Viewer is now locked to the InteractiveAppleseedRender node’s scene (which contains all of the parts from its upstream scenes), and will only show that scene, even if you deselect it or select a different node.

Next, pin the same node to the Hierarchy View. This time, use a shortcut:

- Select the InteractiveAppleseedRender node.

- Middle-click and drag the InteractiveAppleseedRender node from the Graph Editor into the Hierarchy View.

As with the Viewer, the Hierarchy View will now remain locked to the output of InteractiveAppleseedRender, regardless of your selection. Now you are free to select any node for editing in the Node Editor, but you will always be viewing the final results of the last node in the graph.
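The difference between a pinned and an unpinned editor can be summarised in a few lines of plain Python. ToyEditor is a hypothetical class invented for this sketch and does not correspond to any Gaffer class.

```python
class ToyEditor:
    """Hypothetical stand-in for a Viewer, Hierarchy View, or Node Editor."""

    def __init__(self):
        self.pinned_node = None

    def pin(self, node):
        self.pinned_node = node

    def displayed_node(self, selected_node):
        # A pinned editor ignores the selection; an unpinned one follows it.
        if self.pinned_node is not None:
            return self.pinned_node
        return selected_node


viewer = ToyEditor()
node_editor = ToyEditor()
viewer.pin("InteractiveAppleseedRender")

print(viewer.displayed_node("Camera"))       # InteractiveAppleseedRender
print(node_editor.displayed_node("Camera"))  # Camera
print(node_editor.displayed_node(None))      # None
```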

For the final adjustment to the UI, create another Viewer in the top-left panel, and pin the Catalogue node to it:

- At the top-right of the top panel, click the layout menu button to open the layout menu.

- Select Viewer. A new Viewer will appear on the panel next to the first one.

- Middle-click and drag the Catalogue node onto the new Viewer. That Viewer is now pinned to that node.

Now you can switch between the scene’s geometry (first Viewer) and the rendered image (second Viewer).

Now it is time to shade Gaffy.

Adding shaders and lighting

It’s time to add shaders and lighting. Lights are created at their own location, and can be added anywhere in the graph. For efficiency, shaders should be added to the geometry as early as possible.

Making some space

It will be best if you keep the render option nodes at the end of the graph. Since you will be adding shader nodes after the scene nodes, first add some space in the middle of the graph:

Select the lower five nodes by clicking and dragging a marquee over them.

Click and drag the nodes to move them to a lower position in the graph.

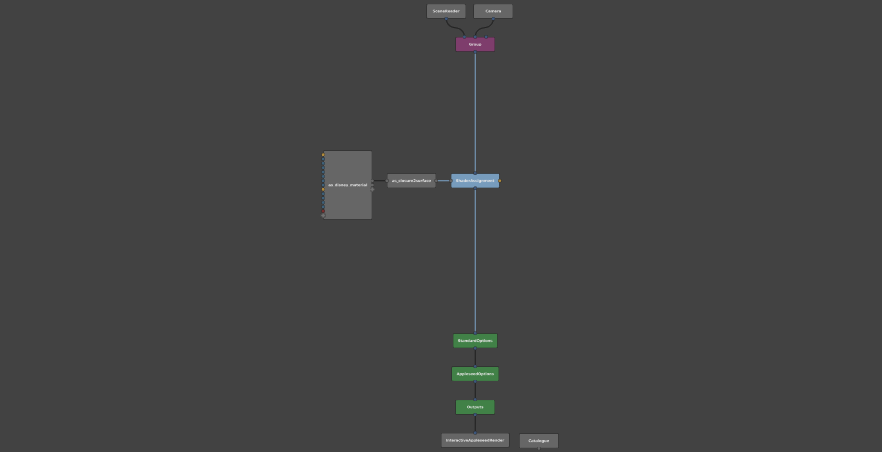

Adding a shader

Now that you have more space, it’s time to add some shading nodes:

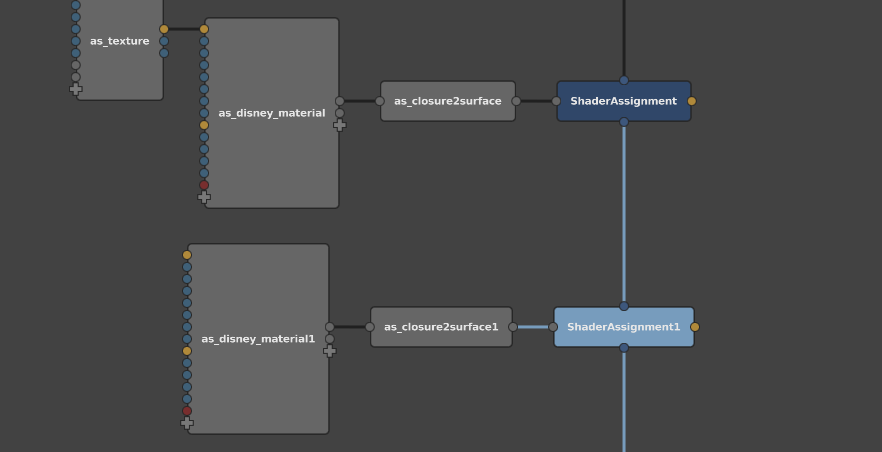

Below and to the left of the Group node, create a Closure2Surface node (Appleseed > Shader > Surface > As_Closure2Surface).

Create a Disney Shader node (Appleseed > Shader > Shader > As_Disney_Material).

In the Node Editor, give the shader reflective surface properties:

- Set the Specular Weight plug to 0.6.

- Set the Surface Roughness plug to 0.35.

Connect the outColor plug of the Disney Shader node to the input plug of the Closure2Surface node.

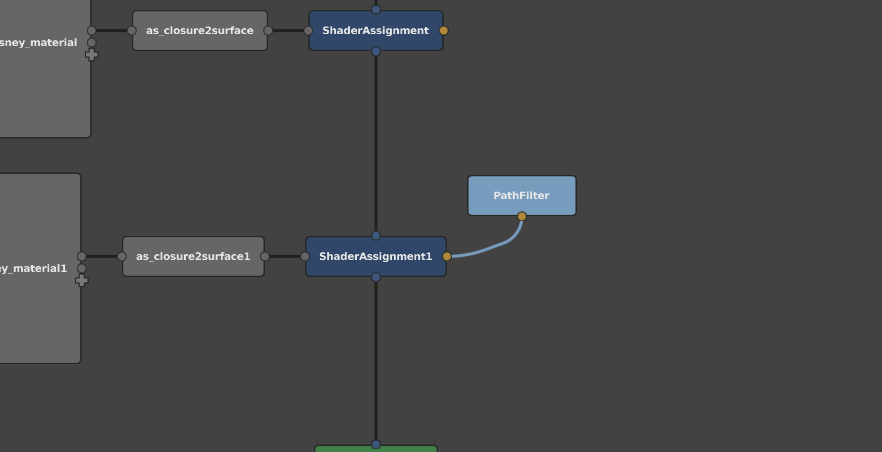

Select the Closure2Surface node and create a ShaderAssignment node (Scene > Attributes > ShaderAssignment).

Click and drag the ShaderAssignment node onto the connector between the Group and StandardOptions nodes. The ShaderAssignment node will be inserted between them.

Important

In the Graph Editor, shader data flows from left to right.

In your newly shaded graph, the ShaderAssignment node takes the shader flowing in from the left and assigns it to Gaffy’s scene flowing in from the top. Now that Gaffy has received a proper shader, the whole image has turned black, because the scene currently lacks lighting.

Adding an environment light

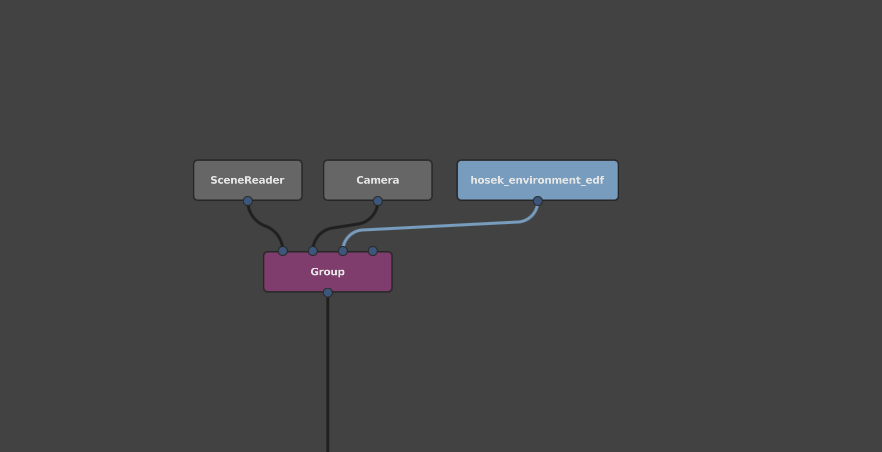

For lights to take effect, they need to be combined with the main scene. For simplicity, use a global environment light:

- Create a PhysicalSky node (Appleseed > Environment > PhysicalSky).

- Place it next to the Camera node in the Graph Editor.

- In the Node Editor, adjust the node’s angle and brightness plugs:

- Set the Sun Phi Angle plug to 100.

- Set the Luminance plug to 2.5.

- Connect the PhysicalSky node’s out plug to the Group node’s next free input plug (in2, following in0 and in1).

For the light to take effect, you will need to assign it:

- Select the AppleseedOptions node.

- In the Node Editor, expand the Environment section.

- Toggle the switch next to the Environment Light plug to enable it.

- Type /group/light into the plug’s field.

The interactive render will now begin updating, and you will be able to see Gaffy with some basic shaders and lighting.

Adding textures

As Gaffy is looking a bit bland, you should drive the shader with some robot textures:

Create an Appleseed Color Texture node (Appleseed > Shader > Texture2d > asTexture).

In the Node Editor, point the node to the textures:

- For the Filename plug, type ${GAFFER_ROOT}/resources/gafferBot/textures/base_COL/base_COL_<UDIM>.tx.

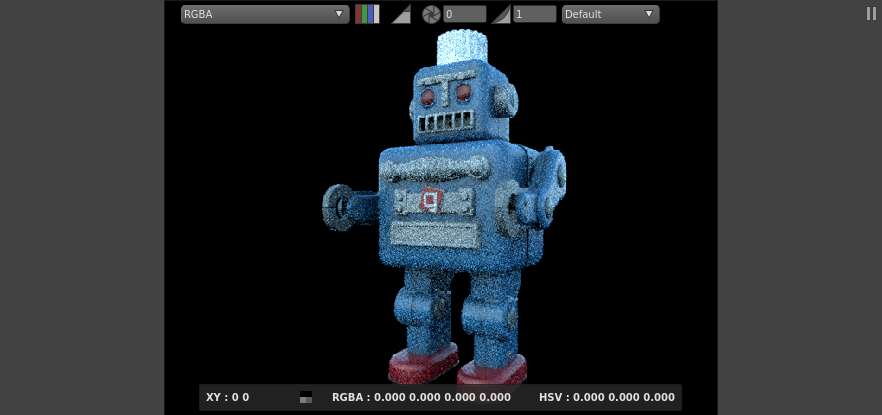

In the Graph Editor, connect the Appleseed color texture node’s OutputColor plug to the Disney Material node’s SurfaceColor plug. Gaffy’s textures will now drive the color of the surface shader, and the render will update to show the combined results.
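The <UDIM> token in the texture file name stands for a tile number. Under the widely used UDIM convention, the tile covering UV coordinate (u, v) is numbered 1001 + floor(u) + 10 * floor(v). The sketch below applies that convention to the file name pattern. The helper names are invented here, and which tiles actually exist in the gafferBot textures is not stated in this tutorial.

```python
import math


def udim_tile(u, v):
    """Return the UDIM tile number that contains UV coordinate (u, v)."""
    return 1001 + math.floor(u) + 10 * math.floor(v)


def resolve_udim(file_name, u, v):
    """Substitute the <UDIM> token with the tile number for (u, v)."""
    return file_name.replace("<UDIM>", str(udim_tile(u, v)))


pattern = "base_COL_<UDIM>.tx"
print(resolve_udim(pattern, 0.5, 0.5))  # base_COL_1001.tx
print(resolve_udim(pattern, 1.2, 0.3))  # base_COL_1002.tx
print(resolve_udim(pattern, 0.5, 1.5))  # base_COL_1011.tx
```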

Adding another shader

Right now, the physical surface of all of Gaffy’s geometry looks the same, because the shader is applying to every component of the geometry. To fix this, you should create an additional metallic shader and apply it selectively to different locations.

Begin by creating another shader:

Create another pair of Disney Shader and Closure2Surface nodes.

Connect the outColor plug of the Disney Shader node to the input plug of the Closure2Surface node.

Give the shader some metallic properties:

- Set Metallicness to 0.8.

- Set Surface Roughness to 0.4.

Select the new Closure2Surface node and create another ShaderAssignment node.

Insert the new ShaderAssignment node after the first ShaderAssignment node.

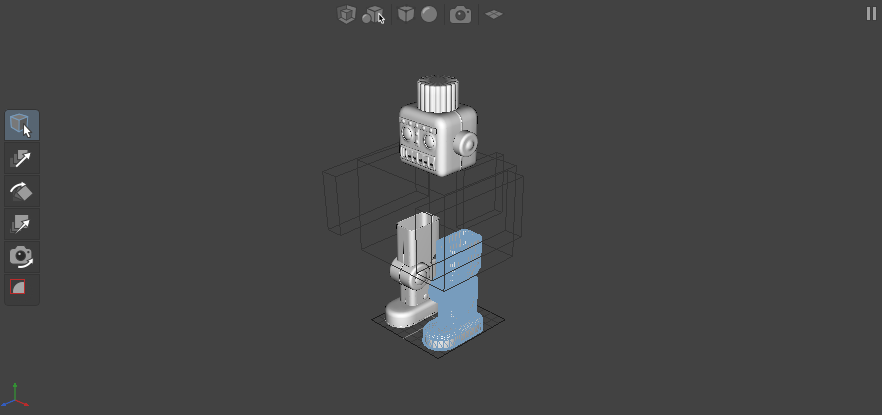

The Viewer will update to show the new shader.

You will immediately notice that all the geometry is now metallic. The new shader has overridden the previous one.

Important

The last ShaderAssignment node applied to a location takes precedence over any others.
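The precedence rule can be sketched as a loop that applies assignments in graph order, letting each one overwrite earlier results for the locations it covers. This is a toy model in plain Python, not Gaffer's implementation. The function assign_shaders, the shader labels, and the shortened location names are hypothetical.

```python
def assign_shaders(locations, assignments):
    """Apply (shader, predicate) pairs in graph order; later pairs win."""
    assigned = {}
    for shader, applies_to in assignments:
        for path in locations:
            if applies_to(path):
                assigned[path] = shader
    return assigned


# Hypothetical, shortened location names.
locations = ["/group/GAFFERBOT/body", "/group/GAFFERBOT/ear"]


def everything(path):
    return True


# Two unrestricted assignments: the second overrides the first everywhere.
unfiltered = assign_shaders(
    locations, [("plastic", everything), ("metal", everything)])
print(unfiltered)

# Restricting the second assignment leaves the first in place elsewhere.
restricted = assign_shaders(
    locations, [("plastic", everything),
                ("metal", lambda path: path.endswith("/ear"))])
print(restricted)
```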

Filtering a shader

In order to selectively apply a shader to only certain locations in the scene, you will need to filter the shader assignment, using a filter node that points to specific locations in the scene:

Create a PathFilter node (Scene > Filters > PathFilter).

In the Node Editor, click the add button next to Paths. This will add a new text field.

In the text field, type /group/GAFFERBOT/C_torso_GRP/C_head_GRP/C_head_CPT/L_ear001_REN. This is the full path to Gaffy’s left ear.

Connect the PathFilter node’s out plug to the ShaderAssignment1 node’s filter plug (yellow, on the right).

Now when you check the render, you will see that the chrome shader is only applied to Gaffy’s left ear. There are many other parts of Gaffy that could use the chrome treatment, but it would be tedious for you to manually enter multiple locations. Instead, we will demonstrate two easier ways to add locations to the filter: using text wildcards, and interacting directly with the geometry through the Viewer.

Filtering using wildcards

Earlier, you used /group/GAFFERBOT/C_torso_GRP/C_head_GRP/C_head_CPT/L_ear001_REN, which only pointed to the left ear. You could apply the filter to both ears by adding wildcards to the /L_ear001_REN location in the path:

- Select the PathFilter node.

- In the Node Editor, double-click the path field you created earlier, and change it to /group/GAFFERBOT/C_torso_GRP/C_head_GRP/C_head_CPT/*_ear*. The filter will now match the left and the right ears.
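To get a feel for how such a pattern selects locations, the sketch below matches paths one name at a time using Python's fnmatch module. It assumes that a * wildcard matches any characters within a single location name and never spans a / separator. This is an approximation for illustration, not Gaffer's matching code. Apart from L_ear001_REN, the location names tested are assumed or hypothetical.

```python
from fnmatch import fnmatchcase


def path_matches(pattern, path):
    """Compare name by name, so a * never matches across a / separator."""
    pattern_names = pattern.strip("/").split("/")
    path_names = path.strip("/").split("/")
    if len(pattern_names) != len(path_names):
        return False
    return all(fnmatchcase(n, p) for n, p in zip(path_names, pattern_names))


head = "/group/GAFFERBOT/C_torso_GRP/C_head_GRP/C_head_CPT"
pattern = head + "/*_ear*"

print(path_matches(pattern, head + "/L_ear001_REN"))    # True
print(path_matches(pattern, head + "/R_ear001_REN"))    # True (assumed name)
print(path_matches(pattern, head + "/C_mouth001_REN"))  # False (hypothetical name)
print(path_matches(pattern, head))                      # False (parent location)
```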

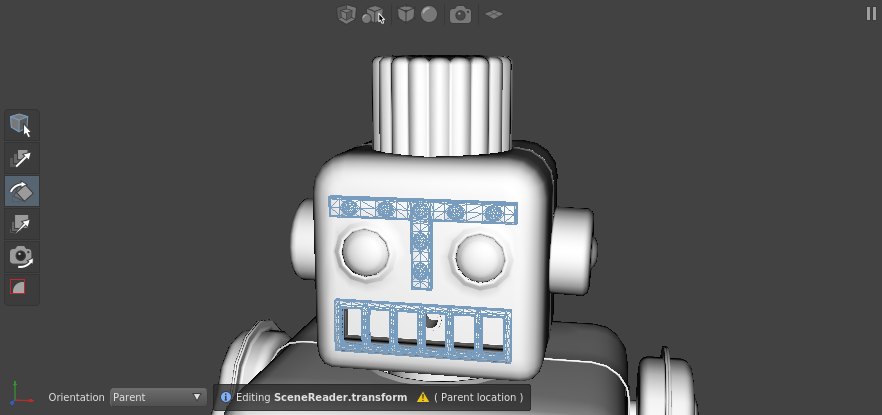

Filtering by dragging selections

As your final lesson in this tutorial, add the metallic shader to the rest of the appropriate parts of Gaffy. This time, you can add to the filter by directly interacting with the scene:

In the top panel, switch to the Viewer containing the 3D geometry view.

Zoom and pan to Gaffy’s face.

Click Gaffy’s unibrow.

Shift + click Gaffy’s mouth to add it to the selection.

Click and drag the selection (the cursor will change), and hold it over the PathFilter node without releasing.

While still holding the mouse button, hold Shift (the cursor will change again). You are now adding to the path, rather than replacing it.

Release the selection over the PathFilter node. This will add the locations as new value fields on the plug.

Tip

Just as locations can be added by holding Shift, they can be removed by holding Ctrl (the cursor will change accordingly).
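The three drop behaviours described above (replace, add with Shift, remove with Ctrl) amount to simple list operations on the filter's paths. The sketch below models them in plain Python. The function drop_on_filter and the two /hypothetical/... location names are invented for illustration and are not taken from Gaffy's actual hierarchy.

```python
def drop_on_filter(paths, selection, modifier=None):
    """Return the filter's new path list after a drag and drop."""
    if modifier == "shift":   # add to the existing paths
        return paths + [p for p in selection if p not in paths]
    if modifier == "ctrl":    # remove from the existing paths
        return [p for p in paths if p not in selection]
    return list(selection)    # plain drop: replace the paths


paths = ["/group/GAFFERBOT/C_torso_GRP/C_head_GRP/C_head_CPT/*_ear*"]
selection = ["/hypothetical/brow", "/hypothetical/mouth"]

added = drop_on_filter(paths, selection, "shift")
removed = drop_on_filter(added, ["/hypothetical/mouth"], "ctrl")
replaced = drop_on_filter(paths, selection)

print(added)
print(removed)
print(replaced)
```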

Add and remove locations to the filter as you see fit. Remember to switch between the two Viewers to check the render output as it updates. After adding Gaffy’s hands and bolts to the filter, you should achieve an image similar to this:

Recap

Congratulations! You’ve built and rendered your first scene in Gaffer.

You should now have a basic understanding of Gaffer’s interface, the flow of data in a graph, how to manipulate the scene, and how to add geometry, lights, textures, and shaders.

Together, these give you a solid basis for further learning and exploration.